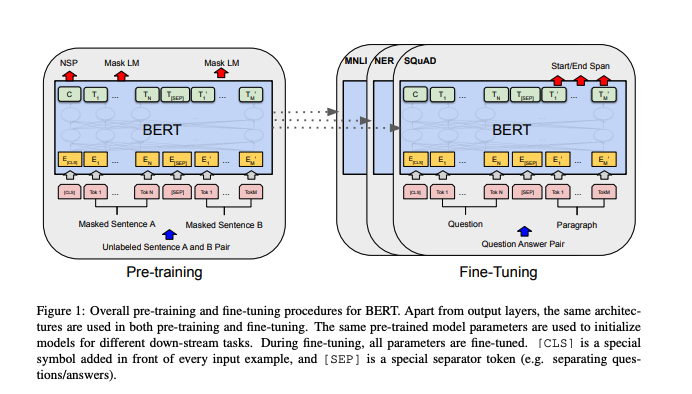

The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning) – Jay Alammar

10 Things You Need to Know About BERT and the Transformer Architecture That Are Reshaping the AI Landscape - neptune.ai